ViM: Out-Of-Distribution with Virtual-logit Matching

SenseTime Group Limited

Abstract

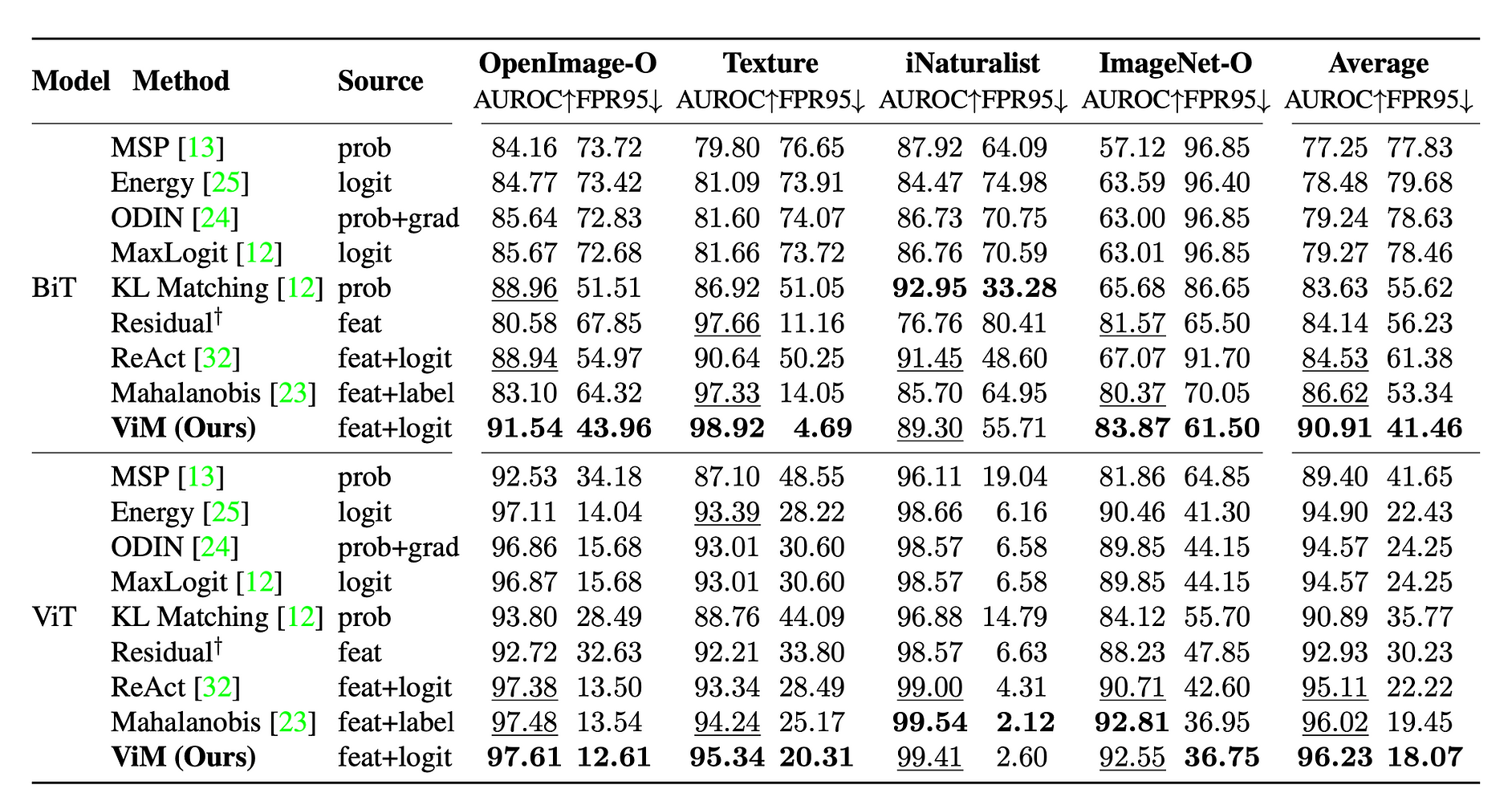

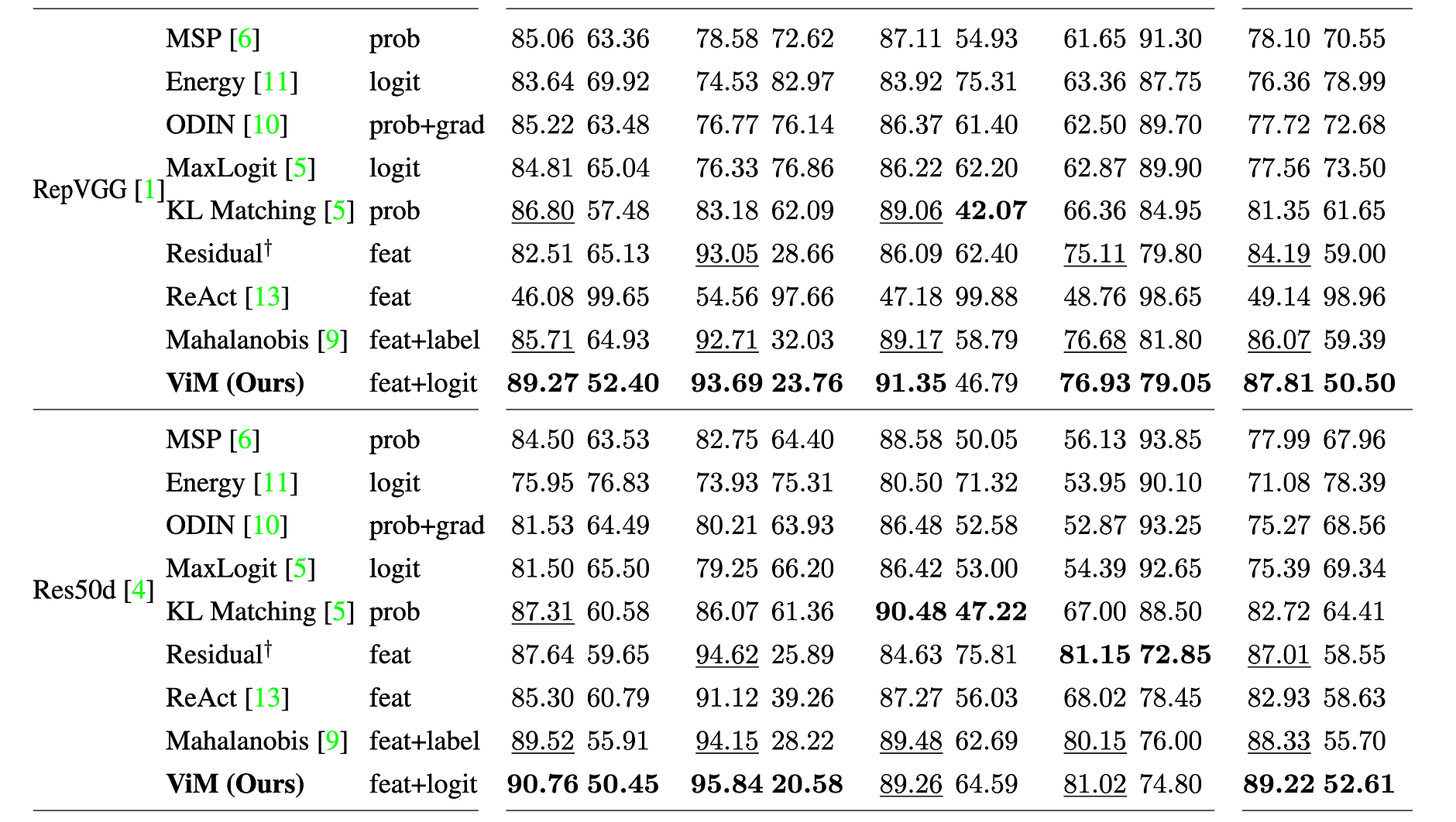

Most existing Out-Of-Distribution (OOD) detection algorithms depend on a single input source: the feature, the logit, or the softmax probability. However, the immense diversity of OOD examples makes such methods fragile. Some OOD datasets are easy to identify in the feature space but hard to distinguish using logits, and vice versa. Motivated by this observation, we propose a novel OOD scoring method named Virtual-logit Matching (ViM), which combines the class-agnostic score from the feature space with the In-Distribution (ID) class-dependent logits. Specifically, an additional logit representing the virtual OOD class is generated from the residual of the feature against the principal space, and is then matched with the original logits by a constant scaling. The probability of this virtual logit after softmax is the indicator of OOD-ness. To facilitate the evaluation of large-scale OOD detection in academia, we create a new OOD dataset for ImageNet-1K, which is human-annotated and is 8.8 times the size of existing datasets. We conducted extensive experiments on both CNNs and vision transformers to demonstrate the effectiveness of the proposed ViM score. In particular, using the BiT-S model, our method achieves an average AUROC of 90.91% on four difficult OOD benchmarks, which is 4% higher than the best baseline.

OpenImage-O

We build a new OOD dataset called OpenImage-O for the ID dataset ImageNet-1K. It is manually annotated, has a naturally diverse distribution, and is large in scale. It is built to overcome several shortcomings of existing OOD benchmarks. OpenImage-O is filtered image by image from the test set of OpenImage-V3, which was collected from Flickr without a predefined list of class names or tags; this yields natural class statistics and avoids an initial design bias. It can be downloaded here.

Method

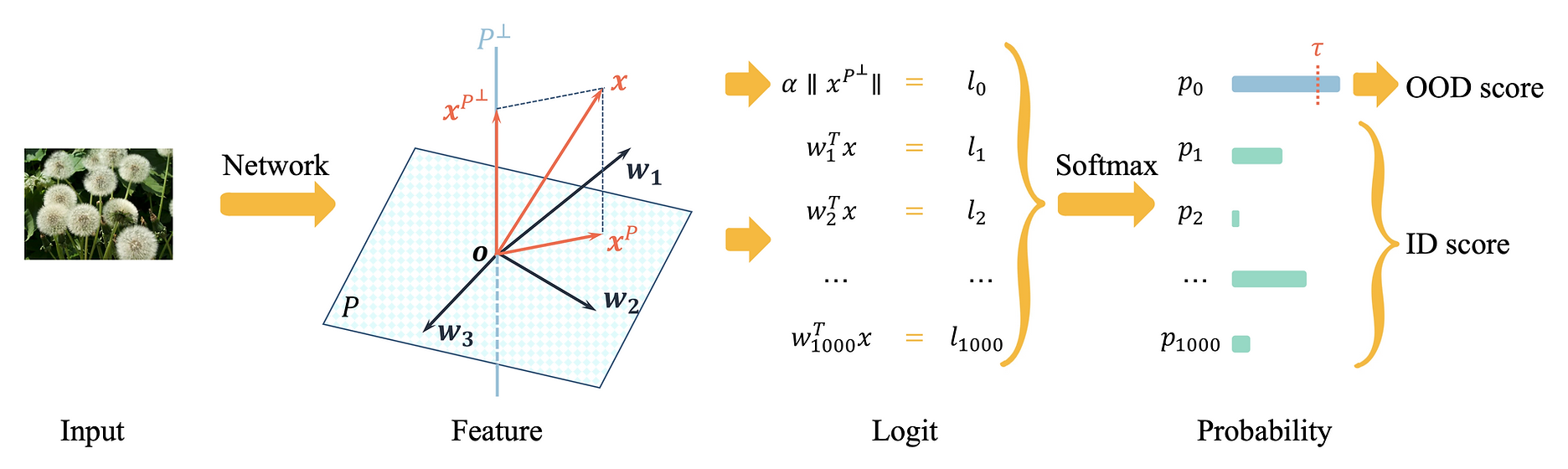

The pipeline of ViM. The principal space \(P\) and the matching constant \(\alpha\) are determined beforehand from the training set. At inference time, the feature \(\mathbf{x}\) is computed by the network, and the virtual logit \(\alpha\|\mathbf{x}^{P^\perp}\|\) is computed by projection and scaling.
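The offline fitting step and the virtual-logit computation can be sketched in NumPy as below. This is a minimal illustration under stated assumptions, not the authors' implementation: we assume \(P\) is spanned by the top-`dim` eigenvectors of the uncentered second-moment matrix of the training features (the paper may shift features to a specific origin first, which this page does not describe), and we assume "matching by a constant scaling" means choosing \(\alpha\) so that the average virtual logit equals the average maximum ID logit over the training set. The function names and the `dim` parameter are hypothetical.

```python
import numpy as np

def fit_principal_space(train_feats: np.ndarray, dim: int) -> np.ndarray:
    """Return an orthonormal basis (N x (N - dim)) of the complement P^perp.

    Assumption: P is spanned by the top-`dim` eigenvectors of the
    uncentered second-moment matrix of the training features.
    """
    second_moment = train_feats.T @ train_feats / len(train_feats)
    eigvals, eigvecs = np.linalg.eigh(second_moment)  # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]                 # re-sort descending
    return eigvecs[:, order[dim:]]                    # columns spanning P^perp

def residual_norm(feats: np.ndarray, perp_basis: np.ndarray) -> np.ndarray:
    """||x^{P^perp}||: with orthonormal columns R, ||R R^T x|| == ||R^T x||."""
    return np.linalg.norm(feats @ perp_basis, axis=-1)

def fit_alpha(train_logits: np.ndarray, train_res_norms: np.ndarray) -> float:
    """Assumption: match the mean virtual logit to the mean max ID logit."""
    return train_logits.max(axis=1).sum() / train_res_norms.sum()

def virtual_logit(feats: np.ndarray, perp_basis: np.ndarray, alpha: float) -> np.ndarray:
    """Virtual OOD logit alpha * ||x^{P^perp}|| for each feature row."""
    return alpha * residual_norm(feats, perp_basis)

# Toy usage with random "features" and a random linear classifier head.
rng = np.random.default_rng(0)
feats = rng.normal(size=(500, 16))
W = rng.normal(size=(16, 10))
logits = feats @ W
perp = fit_principal_space(feats, dim=8)
alpha = fit_alpha(logits, residual_norm(feats, perp))
v = virtual_logit(feats, perp, alpha)
```

By construction of `fit_alpha`, the virtual logits computed on the training features have the same mean as the per-sample maximum logits, which is what puts the two on a comparable scale before the softmax.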

After softmax, the probability corresponding to the virtual logit is the OOD score.
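Written out, and assuming \(l_1, \dots, l_C\) denote the \(C\) ID logits for feature \(\mathbf{x}\) (symbols introduced here for illustration), the score is the softmax probability of the virtual logit once it is appended to the ID logits:

$$
\mathrm{ViM}(\mathbf{x}) = \frac{e^{\alpha\|\mathbf{x}^{P^\perp}\|}}{\sum_{i=1}^{C} e^{l_i} + e^{\alpha\|\mathbf{x}^{P^\perp}\|}}
$$

A large residual norm \(\|\mathbf{x}^{P^\perp}\|\) or small ID logits both push this probability toward 1.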

An input is classified as OOD if the score is larger than a threshold \(\tau\).
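The scoring and thresholding steps can be sketched as follows, assuming the ID logits and the virtual logit have already been computed. This is an illustrative sketch, not the authors' code: `vim_score` and `is_ood` are hypothetical names, and the value of \(\tau\) is a free choice here (this page does not say how it is set; in practice it might be tuned on held-out data).

```python
import numpy as np

def vim_score(logits: np.ndarray, vlogit: np.ndarray) -> np.ndarray:
    """Softmax probability of the virtual logit appended to the ID logits.

    logits: (B, C) ID logits; vlogit: (B,) virtual logits.
    """
    all_logits = np.concatenate([logits, vlogit[:, None]], axis=1)
    # Subtract the row max before exponentiating for numerical stability.
    all_logits = all_logits - all_logits.max(axis=1, keepdims=True)
    exp = np.exp(all_logits)
    return exp[:, -1] / exp.sum(axis=1)

def is_ood(score: np.ndarray, tau: float) -> np.ndarray:
    """Flag inputs whose ViM score exceeds the threshold tau."""
    return score > tau

# Toy usage: a confident ID-like sample and a sample with a large virtual logit.
logits = np.array([[5.0, 1.0, 0.0],
                   [0.5, 0.2, 0.1]])
vlogit = np.array([0.0, 4.0])
scores = vim_score(logits, vlogit)
flags = is_ood(scores, tau=0.5)
```

Subtracting the row maximum leaves the softmax unchanged but avoids overflow when logits are large; here the first sample gets a score near 0 and the second a score above 0.9.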

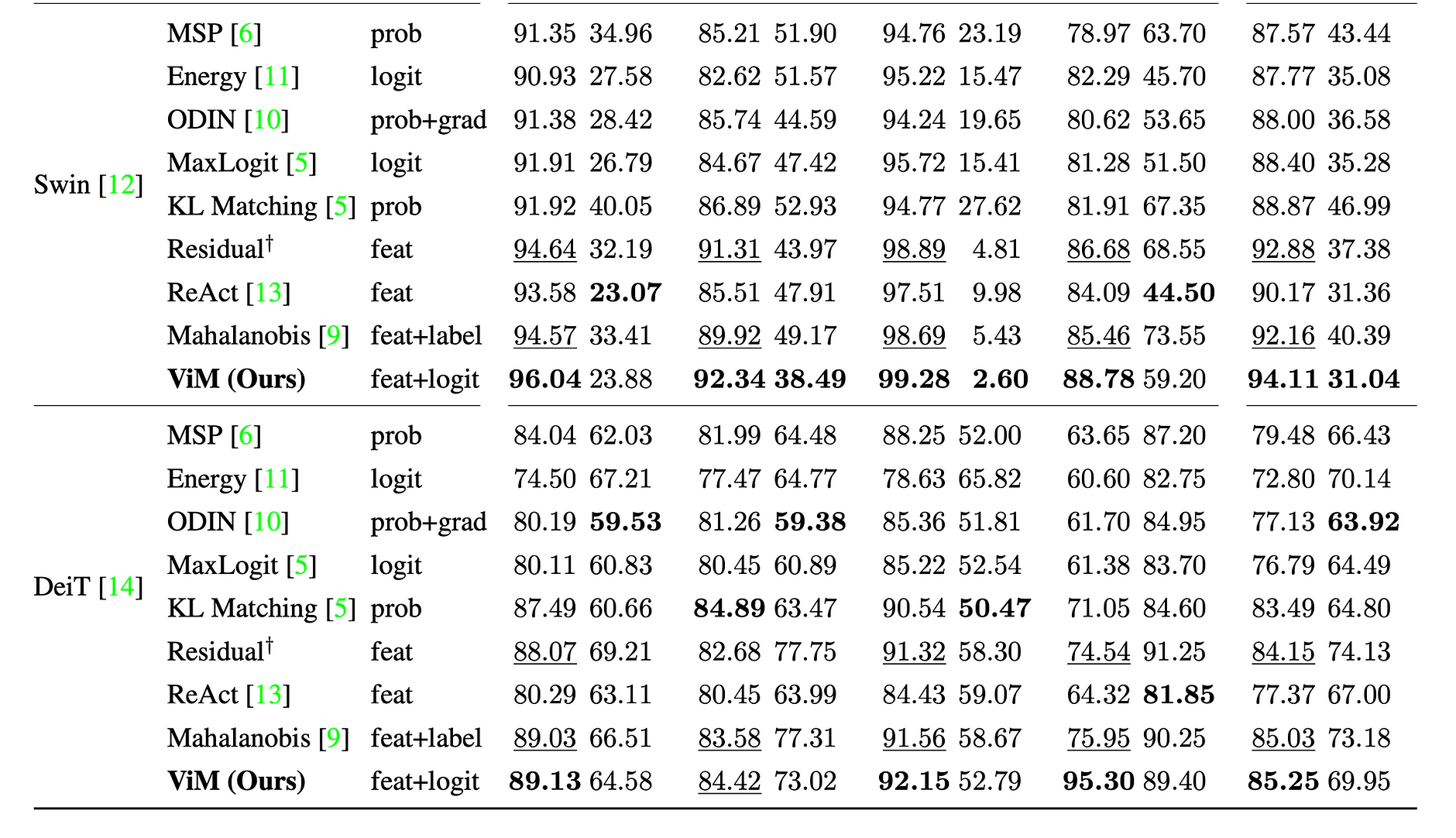

Result

Material

Citation

@inproceedings{haoqi2022vim,
  title = {ViM: Out-Of-Distribution with Virtual-logit Matching},
  author = {Wang, Haoqi and Li, Zhizhong and Feng, Litong and Zhang, Wayne},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year = {2022}
}

Related Work

Semantically Coherent Out-of-Distribution Detection: addresses the out-of-distribution detection problem in terms of differences in semantics rather than differences between datasets.